R-Shiny is an excellent framework for creating interactive dashboards, especially for data scientists without extensive web development experience. Similar technologies in Python include Flask, Dash and Streamlit. Bringing all these different dashboards under one roof, with unified authentication and user management, can be a challenging task. In this blog series we will show how we implemented such a framework with AWS.

Use Case and Requirements

The dashboard framework was created for the research department of a major financial institution. Analysts and data scientists had already created dashboards covering different topics, based on numerous technologies including R-Shiny and Python-Flask. However, a secure and unified user authentication mechanism is crucial to put the dashboards into production and restrict access to selected users. Additionally, most analysts and data scientists do not have much DevOps experience, for example with Docker containers, and thus need an easy and automated way to adapt their existing dashboards. Last but not least, head count on the system operations side was limited, so a simple solution with low maintenance was needed. The entire solution needed to be implemented on Amazon Web Services (AWS), the cloud provider of choice.

Based on this situation we were asked to create a dashboard framework architecture with these requirements in mind:

- Secure, end-to-end encrypted (SSL, TLS) access to dashboards.

- Secure authentication through E-mail and Single-Sign-On (SSO).

- Horizontal scalability of dashboards according to usage, fail-safe.

- Easy adaptability by analysts through automation and continuous integration (CI/CD).

- Easy maintenance and extensibility for system operators.

System Architecture

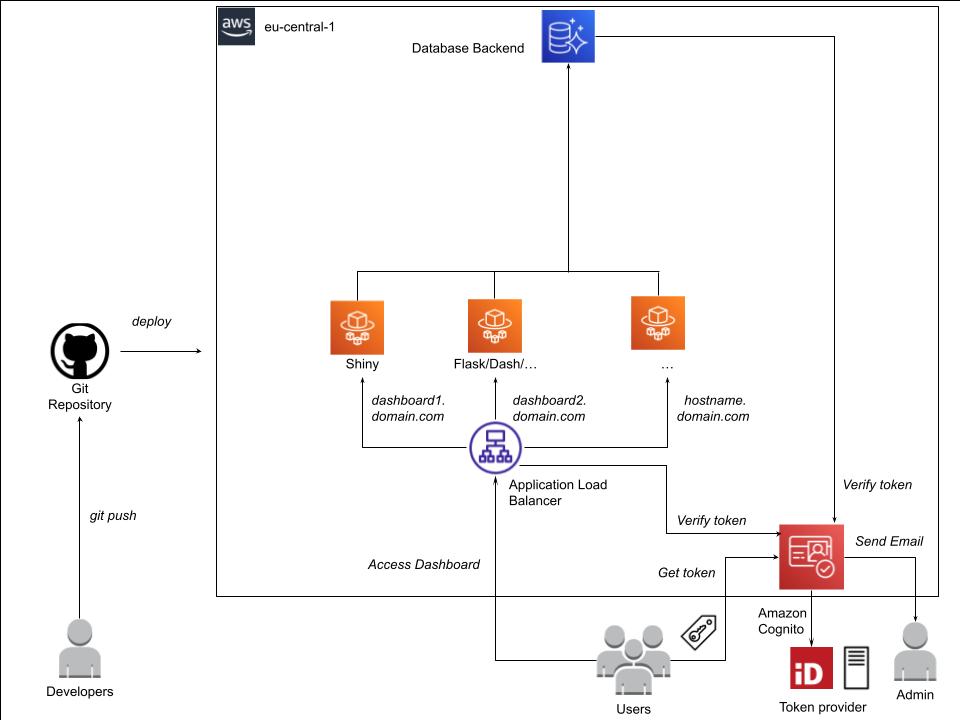

All considerations above led to a simple yet effective system architecture based on selected managed AWS services, including:

- Application Load Balancer (ALB) to handle secure end-to-end (SSL) encrypted access to the dashboards based on different host names (host-based-routing).

- AWS Cognito for user authentication based on E-mail and SSO through Ping Federate.

- AWS Fargate for horizontal scalability, fail-safe operations and easy maintenance.

- AWS CodePipeline and CodeBuild for the automated build of dashboard Docker containers.

- Extensive use of low-maintenance managed services (Fargate, Cognito, ALB) and the AWS Cloud Development Kit (CDK) to define the infrastructure as code, which is managed in Git and deployed via CodePipeline.

The figure above illustrates the resulting architecture; the following sections describe each component in more detail:

1. Application Load Balancer

A central piece of the system architecture is the Application Load Balancer (ALB), which routes traffic securely to each dashboard. We configured the ALB with host-based routing, so that requests to e.g. https://dashboard1.domain.com or https://dashboard2.domain.com are routed to the respective dashboards. The ALB handles SSL offloading, so all communication between clients and the load balancer is encrypted via SSL/TLS. Additionally, we use an ALB feature to authenticate users through an OIDC-compliant identity provider, such as Amazon Cognito. Thus, all users without an authentication token are redirected to a login page, as provided by the Cognito Hosted UI. After successful authentication, users are allowed to access the dashboard of their choice.
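
To make the routing behaviour more concrete, here is a minimal TypeScript sketch of the decision taken for each incoming request. It is a simplified model and not the ALB's actual implementation: the host names follow the example above, while the target group names, the `hasValidSession` flag and the `routeRequest` function are hypothetical.

```typescript
// Simplified model of host-based routing with an authentication step.
// All names below are illustrative assumptions, not AWS APIs.

type Action =
  | { kind: "redirect-to-login" }
  | { kind: "forward"; targetGroup: string }
  | { kind: "not-found" };

interface IncomingRequest {
  host: string;             // value of the HTTP Host header
  hasValidSession: boolean; // true if the request carries a valid auth session
}

// One rule per dashboard: host name -> target group (hypothetical names).
const hostRules: Record<string, string> = {
  "dashboard1.domain.com": "tg-dashboard1",
  "dashboard2.domain.com": "tg-dashboard2",
};

function routeRequest(req: IncomingRequest): Action {
  const host = req.host.toLowerCase();
  const known = Object.prototype.hasOwnProperty.call(hostRules, host);
  if (!known) {
    // No rule matches this host name: fall through to a default action.
    return { kind: "not-found" };
  }
  if (!req.hasValidSession) {
    // Unauthenticated users are sent to the login page first.
    return { kind: "redirect-to-login" };
  }
  return { kind: "forward", targetGroup: hostRules[host] };
}
```

In this model, a request to `dashboard1.domain.com` without a session is redirected to the login page, while an authenticated request is forwarded to the target group of that dashboard.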

2. AWS Cognito for User Authentication

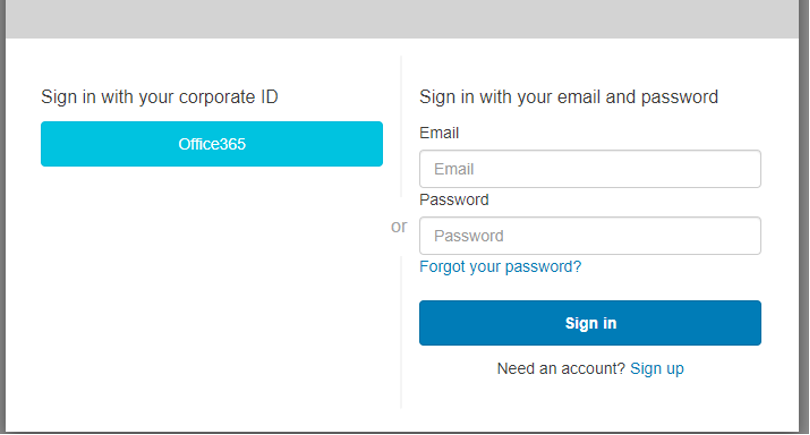

We used Cognito, the managed identity provider by AWS, which supports all important authentication mechanisms such as e-mail/password (plus MFA) and federated identity providers like Google, Facebook or Apple. Most importantly, Cognito also supports SAML providers like Ping Federate for SSO within large corporations. The login form is also hosted by Cognito and is presented to users who have not yet logged into any dashboard, as shown in the figure above.
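
As an illustration of the redirect to the hosted login form, the sketch below builds the kind of authorization URL a user is sent to. In the setup described here the load balancer generates this redirect itself, so the code is only meant to show the shape of the URL. The domain prefix, region, client ID and the `buildLoginUrl` helper are hypothetical placeholders; the `/oauth2/authorize` endpoint and the `/oauth2/idpresponse` callback path follow the AWS documentation for Cognito and ALB authentication.

```typescript
// Sketch: shape of a Cognito Hosted UI login URL (placeholder values only).

interface HostedUiConfig {
  domainPrefix: string; // hypothetical Cognito domain prefix
  region: string;       // AWS region of the user pool
  clientId: string;     // app client ID of the user pool (placeholder)
}

function buildLoginUrl(cfg: HostedUiConfig, dashboardHost: string, state: string): string {
  const base =
    "https://" + cfg.domainPrefix + ".auth." + cfg.region + ".amazoncognito.com/oauth2/authorize";
  const params: Array<[string, string]> = [
    ["response_type", "code"],
    ["client_id", cfg.clientId],
    // The load balancer receives the authorization code on this path.
    ["redirect_uri", "https://" + dashboardHost + "/oauth2/idpresponse"],
    ["scope", "openid"],
    ["state", state],
  ];
  const query = params
    .map(([key, value]) => encodeURIComponent(key) + "=" + encodeURIComponent(value))
    .join("&");
  return base + "?" + query;
}
```

After a successful login, Cognito redirects the browser back to the `redirect_uri`, where the load balancer completes the OIDC flow and establishes the session.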

3. AWS Fargate

All dashboards run within Docker containers and are hosted as Fargate tasks within a common cluster. This makes it possible to create dashboards independently from each other, with different versions of R (or Python), different packages and even different operating systems. The pricing of Fargate tasks is comparable to EC2, depending on the CPU/memory configuration, but comes with the advantage of being completely managed. This also makes auto-scaling a breeze, since new tasks are added depending on the current workload.
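
The idea of adding tasks according to workload can be sketched as a simple proportional rule. This is a simplified model that assumes scaling on average CPU utilisation; the exact algorithm used by the AWS auto-scaling service is internal to AWS, and the `ScalingPolicy` type and `desiredTaskCount` function are hypothetical names chosen for illustration.

```typescript
// Simplified proportional scaling rule (an assumption, not the AWS algorithm).

interface ScalingPolicy {
  minTasks: number;         // never scale below this number of tasks
  maxTasks: number;         // never scale above this number of tasks
  targetCpuPercent: number; // desired average CPU utilisation per task
}

function desiredTaskCount(
  currentTasks: number,
  avgCpuPercent: number,
  policy: ScalingPolicy
): number {
  // Scale the task count by the ratio of observed to target utilisation.
  const proportional = Math.ceil((currentTasks * avgCpuPercent) / policy.targetCpuPercent);
  // Clamp the result to the configured bounds.
  return Math.min(policy.maxTasks, Math.max(policy.minTasks, proportional));
}
```

With a target of 50% CPU, two tasks running at 90% would be scaled out to four tasks, while four tasks idling at 10% would be scaled in to the configured minimum.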

4. CodePipeline

Time to market is essential in many industries in order to inform the customer about adjustments and new features as fast as possible. Additionally, many dashboard developers do not want to be preoccupied with DevOps tasks like building Docker containers and writing bash scripts. By using CodePipeline we make sure that dashboard developers only need to push changes to the repository. The pipeline then builds the Docker container, pushes it to the Elastic Container Registry (ECR) and subsequently deploys the new container to the cluster using CodeDeploy. The deployment ensures that users have a seamless experience by directing new sessions to new instances and dropping old instances once they have no open sessions left.
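
The session handling during a deployment can be modelled in a few lines. The sketch below is a simplified illustration of the behaviour described above, not the deployment service's implementation; the `TaskInstance` type and both functions are hypothetical.

```typescript
// Simplified model of a rolling deployment with session draining.

interface TaskInstance {
  id: string;
  version: number;      // build number of the deployed container image
  openSessions: number; // number of user sessions currently attached
}

function latestVersion(instances: TaskInstance[]): number {
  return Math.max(...instances.map((i) => i.version));
}

// New sessions always go to the least-loaded instance running the latest version.
function pickInstanceForNewSession(instances: TaskInstance[]): TaskInstance {
  if (instances.length === 0) {
    throw new Error("no running instances");
  }
  const latest = latestVersion(instances);
  return instances
    .filter((i) => i.version === latest)
    .reduce((best, i) => (i.openSessions < best.openSessions ? i : best));
}

// Old instances are kept only while they still serve open sessions.
function removeDrainedInstances(instances: TaskInstance[]): TaskInstance[] {
  if (instances.length === 0) {
    return [];
  }
  const latest = latestVersion(instances);
  return instances.filter((i) => i.version === latest || i.openSessions > 0);
}
```

Calling `removeDrainedInstances` repeatedly while sessions on old instances end eventually leaves only instances of the latest version, without interrupting any user.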

5. CDK and CI/CD

The AWS Cloud Development Kit (CDK) was a very important tool to quickly set up the entire stack, including infrastructure components, build pipelines, and even domain entries. TypeScript was our language of choice since:

- It has the best community support (followed by Python), and

- CDK itself is written in TypeScript, which makes debugging much easier.

Since CDK code is synthesized into ordinary AWS CloudFormation templates through the command `cdk synth`, developers get immediate feedback if something goes wrong, which shortens the feedback cycle. Through `cdk deploy` the template is uploaded and deployed as a CloudFormation stack. Thanks to the infrastructure-as-code principle, it is very easy to track changes in Git version control and deploy stacks to multiple accounts for development, staging and production.

Conclusion

We hope you gained some insight into a system architecture for deploying a simple yet powerful dashboard framework within Amazon Web Services. The presented framework fulfilled all security requirements and requires little maintenance effort thanks to the many integrated managed AWS services. In the next post we will show how such a framework can be built from scratch using CDK, including dashboard templates for R-Shiny, Flask, Dash and Streamlit.

Stay tuned! ✌️

Get in Touch

Interested in creating your own dashboard framework or other data science cloud stacks? Just get in touch:

E-Mail: info@quantargo.com

Contact Form: Link